Enterprise AI Safety Audit Platform for Large Language Model Deployments

Automated AI vulnerability auditing and compliance platform for enterprise LLMs

Built with

Categories

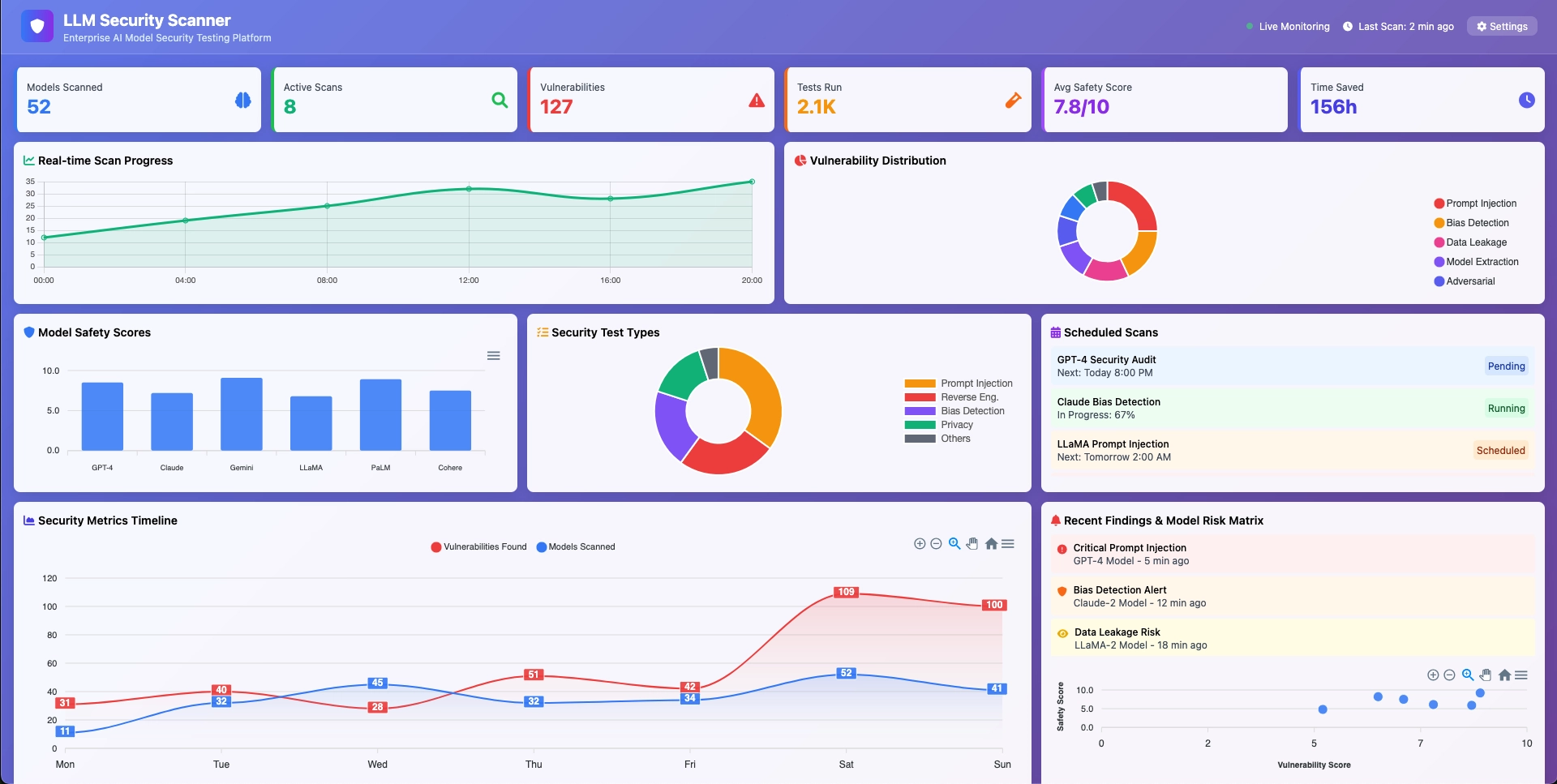

Developed a comprehensive AI safety auditing platform implementing the NIST AI Risk Management Framework (AI RMF) to automate vulnerability detection, compliance reporting, and risk mitigation across enterprise-scale large language models (LLMs). This platform significantly reduces audit times while enhancing AI governance and security posture.

Numbers that tellthe story of success

Project Overview

Created an automated AI safety auditing platform designed to detect risks such as prompt injections, data leakage, and algorithmic biases in large language models using the NIST AI RMF as the compliance backbone. The platform integrates continuous vulnerability scanning and provides comprehensive compliance reporting dashboards.

The Challenge

Organizations lacked scalable and automated solutions for AI safety and compliance, leading to prolonged manual auditing processes and heightened regulatory risks in deploying enterprise LLMs safely.

Our Solution

Built a scalable Kubernetes-based system utilizing Python, TensorFlow, and PyTorch for deep model analysis, combined with React dashboards for real-time audit results and NIST AI RMF compliance validation. The platform automates LLM security testing including reverse engineering and bias detection.

Technology Stack

Key Achievements

Visual journey throughour solution

"This platform revolutionized our AI governance, enabling confident deployment of LLMs with comprehensive safety and compliance assurance."

Confidential Enterprise AI Company

Ready to Build Something Amazing?

Let's discuss how I can help bring your next project to life with proven expertise and cutting-edge technology.